Is your AI solution headed for a performance wall that could derail your business?

Most organizations discover AI scalability limits only after hitting them, which leads to service disruptions, unexpected costs, and stalled innovation. This guide explains how to identify your AI’s scalability ceiling before you reach it, which metrics predict that ceiling, and how to architect systems that can grow with your needs.

Why AI Scalability Ceilings Matter

Scalability issues now rank as the #1 reason for AI project abandonment:

- 68% of enterprise AI projects fail to scale beyond the initial pilot phase

- The average organization wastes $1.2M annually on AI solutions that hit unforeseen limits

- 73% of CIOs report having to replace AI systems prematurely due to scaling constraints

The Four Dimensions of AI Scalability Ceilings

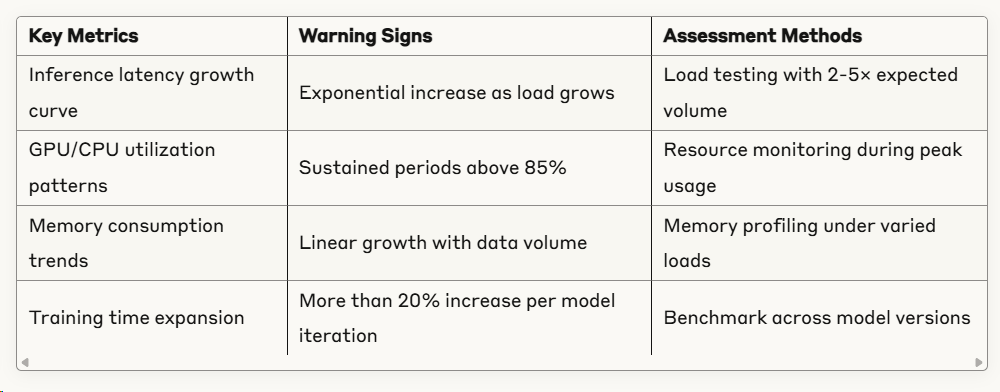

1. Computational Scalability

Definition: The system’s ability to handle increasing computational loads.

Case example: A retail recommendation engine showed 200ms latency at launch but exceeded 2,000ms within six months as the product catalog grew. Earlier ceiling assessment would have revealed the algorithm’s quadratic complexity, allowing for architectural changes before customer experience degraded.
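One way to surface this kind of growth early is to fit a power law to latency measured at several catalog sizes. The sketch below is only an illustration, not the retailer's actual method: the function name `scaling_exponent` and the sample measurements are hypothetical. It takes the least-squares slope of log(latency) against log(size); a slope near 1 suggests linear scaling, and a slope near 2 suggests quadratic behavior.

```python
import math


def scaling_exponent(sizes, latencies_ms):
    """Return the least-squares slope of log(latency) versus log(size).

    For latency ~ c * size**k, the slope is an estimate of k.
    """
    xs = [math.log(s) for s in sizes]
    ys = [math.log(t) for t in latencies_ms]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    return covariance / variance


# Hypothetical measurements: latency quadruples each time the catalog doubles.
catalog_sizes = [10_000, 20_000, 40_000, 80_000]
latencies_ms = [12.5, 50.0, 200.0, 800.0]

exponent = scaling_exponent(catalog_sizes, latencies_ms)
print(f"estimated scaling exponent: {exponent:.2f}")
```

Real measurements are noisy, so the estimate is more trustworthy when it is based on several sizes spanning at least an order of magnitude.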

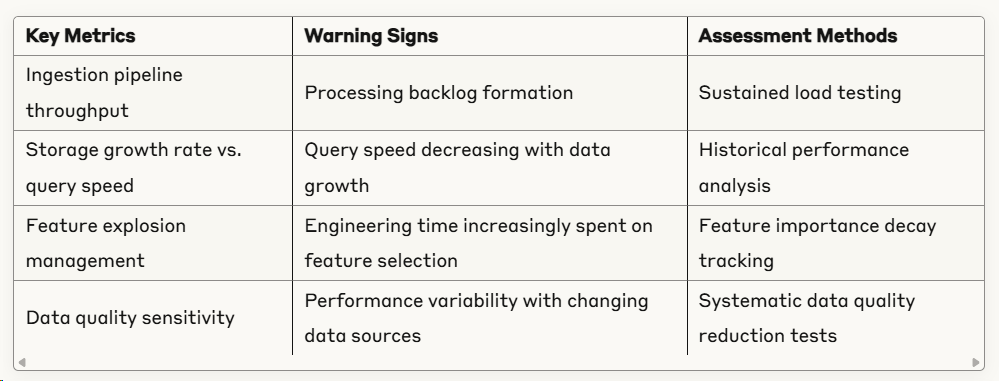

2. Data Scalability

Definition: The system’s capacity to process larger and more complex datasets.

Case example: A financial fraud detection system maintained 92% accuracy with 10M transactions but dropped to 76% at 100M transactions. Root cause: the feature engineering approach couldn’t handle the higher-dimensional space effectively at scale.

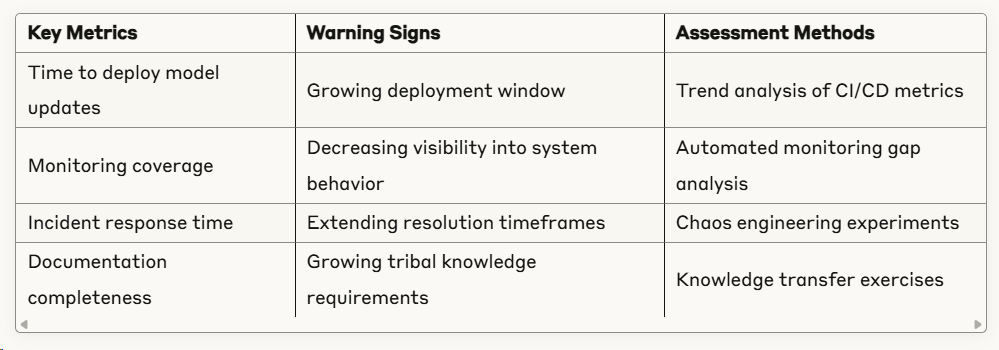

3. Operational Scalability

Definition: The ability to maintain, monitor, and govern the AI system as it grows.

Case example: A healthcare predictive system initially deployed updates in hours, but as the model complexity grew, the deployment window extended to weeks due to expanding validation requirements and regulatory checks.

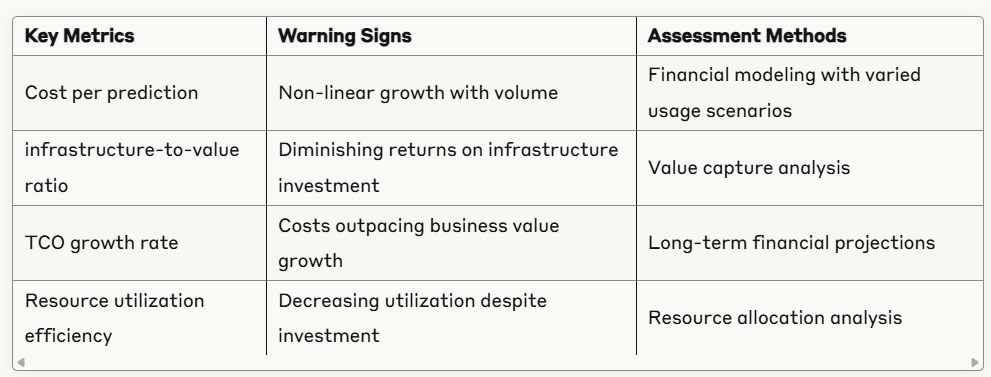

4. Economic Scalability

Definition: The relationship between system growth and total cost of ownership.

Case example: A marketing NLP solution cost $0.03 per analysis at launch but reached $0.15 per analysis within a year as complexity grew. The 5× cost increase exceeded budget allocations, forcing a premature replacement.
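The arithmetic behind a cost trend like this can be sketched in a few lines. The example below assumes that "within a year" means 12 months and that unit cost compounds at a steady monthly rate; both are simplifying assumptions, and the $0.06 budget cap and the function names are hypothetical.

```python
import math


def monthly_growth_rate(start_cost, end_cost, months):
    """Compound monthly growth rate implied by two unit-cost observations."""
    return (end_cost / start_cost) ** (1 / months) - 1


def months_until(cost_now, cost_cap, monthly_rate):
    """Months until unit cost reaches cost_cap if the same rate continues."""
    return math.log(cost_cap / cost_now) / math.log(1 + monthly_rate)


# $0.03 -> $0.15 per analysis, assumed to happen over 12 months.
rate = monthly_growth_rate(0.03, 0.15, months=12)

# Hypothetical budget cap of $0.06 per analysis.
months_to_cap = months_until(0.03, 0.06, rate)

print(f"implied growth: {rate:.1%} per month")
print(f"months until the cap is reached: {months_to_cap:.1f}")
```

Tracking the implied rate monthly, rather than the absolute cost, shows how soon a budget line will be crossed while there is still time to act.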

The Scalability Ceiling Assessment Process

Follow this 5-step process to identify your scalability limits before hitting them:

1. Establish Baseline Performance (2-3 weeks)

- Document current performance across all four dimensions

- Create detailed resource utilization profiles

- Establish performance expectation baselines

2. Define Growth Vectors (1-2 weeks)

- Identify expected growth dimensions (users, data volume, complexity)

- Quantify anticipated growth rates for each dimension

- Map dependencies between growth vectors

3. Conduct Progressive Load Testing (3-4 weeks)

- Execute incremental load increases (25%, 50%, 100%, 200%, 500%)

- Monitor for points where performance degrades non-linearly

- Document resource saturation thresholds
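The load steps above can be checked for a degradation "knee" with a simple comparison of latency growth against load growth. This is a minimal sketch under stated assumptions: the function name `first_nonlinear_step`, the 1.2 tolerance, and the sample results are all hypothetical, and a real assessment would also look at error rates and resource saturation.

```python
def first_nonlinear_step(baseline_ms, results, tolerance=1.2):
    """Return the first load increase (in percent) at which latency has grown
    more than `tolerance` times faster than load, or None if no step does.

    `results` is a list of (load_increase_pct, latency_ms) pairs.
    """
    for increase_pct, latency_ms in results:
        load_factor = 1 + increase_pct / 100
        latency_factor = latency_ms / baseline_ms
        if latency_factor > tolerance * load_factor:
            return increase_pct
    return None


# Hypothetical results for the 25%, 50%, 100%, 200%, and 500% load steps.
baseline_ms = 200.0
results = [(25, 210.0), (50, 230.0), (100, 300.0), (200, 900.0), (500, 4000.0)]

knee = first_nonlinear_step(baseline_ms, results)
print(f"first non-linear step: +{knee}% load")
```

With these sample numbers the function reports the +200% step, where latency has grown 4.5 times against a 3 times increase in load.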

4. Analyze Architectural Bottlenecks (2-3 weeks)

- Perform component-level ceiling analysis

- Identify architectural choke points

- Model component interdependencies

5. Calculate Theoretical Maximums (1-2 weeks)

- Determine mathematical upper bounds

- Compare theoretical limits to business requirements

- Establish runway before ceiling impact
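Once a ceiling is known, the runway can be estimated from the expected growth rate. The sketch below assumes steady compound monthly growth; the function name `runway_months` and the example figures (50,000 items today, a ceiling at 200,000, 10% monthly growth) are illustrative assumptions rather than measured values.

```python
import math


def runway_months(current_size, ceiling_size, monthly_growth):
    """Months until `current_size` reaches `ceiling_size` at a steady
    compound monthly growth rate (0.10 means 10% per month)."""
    if current_size >= ceiling_size:
        return 0.0
    if monthly_growth <= 0:
        return math.inf  # no growth, so the ceiling is never reached
    return math.log(ceiling_size / current_size) / math.log(1 + monthly_growth)


# Illustrative inputs: 50,000 items now, ceiling at 200,000, 10% monthly growth.
months = runway_months(50_000, 200_000, monthly_growth=0.10)
print(f"runway before ceiling: {months:.1f} months")
```

Running the same calculation for each dimension shows which ceiling arrives first and therefore which remediation work to schedule first.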

Case Study: E-commerce Recommendation Engine

A mid-sized online retailer conducted a scalability ceiling assessment on their product recommendation AI:

Starting point:

- 50,000 active products

- 2M monthly active users

- 200ms average recommendation latency

- $0.02 cost per 1,000 recommendations

Assessment findings:

- Computational ceiling: Algorithm complexity would cause latency to exceed 1,000ms at 200,000 products

- Data ceiling: Model accuracy would degrade below acceptable levels at 5M users due to sparse data handling limitations

- Operational ceiling: Retraining pipeline would exceed its 24-hour window at 300,000 products

- Economic ceiling: Cost per 1,000 recommendations would reach $0.08 at 8M users, exceeding budget allocations

Actions taken:

- Refactored the recommendation algorithm to a linear-complexity version

- Implemented progressive feature selection to maintain model simplicity

- Rebuilt training pipeline with parallelization

- Adopted a selective computation approach for the highest-value recommendations

Results one year later:

- Successfully scaled to 250,000 products and 7M users

- Maintained sub-200ms latency

- Kept costs under $0.015 per 1,000 recommendations

- Avoided estimated $2.1M in emergency refactoring costs

Common AI Scalability Ceiling Indicators

Watch for these early warning signs that you’re approaching limits:

- Non-linear latency growth

  - Performance degrades faster than data/user growth

  - Investigate: Algorithm complexity, resource constraints

- Expanding maintenance windows

  - Routine operations take progressively longer

  - Investigate: Technical debt, process inefficiencies

- Diminishing accuracy returns

  - More data/compute yields smaller improvements

  - Investigate: Model architecture limitations, feature saturation

- Accelerating infrastructure costs

  - Resource needs grow faster than capability

  - Investigate: Inefficient resource utilization, architectural flaws

- Growing exception handling

  - Edge cases consume increasing development time

  - Investigate: Fundamental design limitations, scope creep
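Some of these indicators are easy to automate. As one example, the sketch below flags diminishing accuracy returns by comparing accuracy gains between successive training runs. It assumes each run uses roughly twice the data of the previous one; the function name, the 0.02 minimum-gain threshold, and the sample history are hypothetical.

```python
def diminishing_returns_point(history, min_gain=0.02):
    """Return the training-set size at which the accuracy gain over the
    previous run first drops below `min_gain`, or None if it never does.

    `history` is a list of (training_rows, accuracy) pairs ordered by size,
    with each run assumed to use about twice the data of the previous one.
    """
    for (_, prev_acc), (rows, acc) in zip(history, history[1:]):
        if acc - prev_acc < min_gain:
            return rows
    return None


# Hypothetical training history: each doubling of data buys less accuracy.
history = [
    (1_000_000, 0.80),
    (2_000_000, 0.86),
    (4_000_000, 0.89),
    (8_000_000, 0.90),
]

flagged = diminishing_returns_point(history)
print(f"gains fell below threshold at {flagged:,} rows")
```

The same pattern, comparing successive ratios or differences against a threshold, applies to maintenance windows and infrastructure costs.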

Strategic Responses to Approaching Ceilings

When assessment reveals approaching limits, consider these responses:

Architectural Adaptation

- Implement sharding strategies for data distribution

- Adopt microservices to isolate scaling bottlenecks

- Convert batch processes to streaming where possible

Algorithmic Optimization

- Switch to linear or sub-linear complexity algorithms

- Implement approximate computing for non-critical paths

- Adopt progressive computation approaches

Infrastructure Evolution

- Implement auto-scaling with predictive capacity planning

- Adopt specialized hardware for performance-critical components

- Consider hybrid cloud strategies for burst capacity

Economic Realignment

- Implement tiered service levels based on value

- Adopt pay-for-performance vendor arrangements

- Develop differential serving strategies based on business impact
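A differential serving strategy can be as simple as routing each request to a model tier based on its expected business value. The sketch below illustrates the idea only: the `Request` fields, the tier names, and the threshold of 50.0 are hypothetical and would come from your own value model.

```python
from dataclasses import dataclass


@dataclass
class Request:
    user_id: str
    expected_value: float  # hypothetical business-value score for the request


def choose_tier(request, premium_threshold=50.0):
    """Route high-value requests to the expensive model, the rest to a cheap one."""
    if request.expected_value >= premium_threshold:
        return "full_model"
    return "lightweight_model"


# Hypothetical traffic sample.
requests = [Request("a", 120.0), Request("b", 8.5), Request("c", 50.0)]
tiers = [choose_tier(r) for r in requests]
print(tiers)
```

Because only a fraction of traffic reaches the expensive path, the cost per request grows more slowly than total volume.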

Getting Started: Your Scalability Ceiling Assessment Checklist

Ready to assess your AI’s scalability limits? Start with these steps:

- Document your growth projections

  - How many users in 6, 12, and 24 months?

  - How much data will you process at each stage?

  - What performance expectations must be maintained?

- Profile your current system

  - Establish performance baselines across all four dimensions

  - Identify current resource utilization patterns

  - Document existing bottlenecks

- Design your testing approach

  - Create progressive load testing scenarios

  - Establish monitoring across all system components

  - Define clear performance thresholds

- Build your assessment team

  - Include ML engineers, infrastructure specialists, and business stakeholders

  - Assign clear responsibilities for each assessment component

  - Schedule regular progress reviews

The organizations that thrive with AI at scale aren’t those with the biggest budgets or the most advanced algorithms. They’re the ones that systematically identify and address scaling limitations before they become business problems. A proper scalability ceiling assessment isn’t just a technical exercise—it’s a business necessity for sustainable AI success.
