AI systems make decisions affecting millions of lives daily, from loan approvals to medical diagnoses to hiring choices. Yet 84% of AI systems show measurable bias against certain demographic groups.

AI bias monitoring systems offer a solution by continuously tracking, measuring, and mitigating unfair patterns in AI behavior. These tools have become essential as organizations face increasing scrutiny over algorithmic fairness.

Let’s examine how these systems work, which solutions lead the market, and how to implement them effectively.

Understanding AI Bias: The Problem That Demands Monitoring

AI bias manifests in several distinct forms:

Types of Algorithmic Bias

- Sampling Bias: Training data doesn’t represent the population it serves

- Measurement Bias: Features or labels are flawed proxies for what they claim to measure

- Association Bias: Algorithms learn and amplify existing social prejudices

- Automation Bias: Humans over-trust AI recommendations despite evidence of errors

- Confirmation Bias: Systems favor information confirming existing beliefs

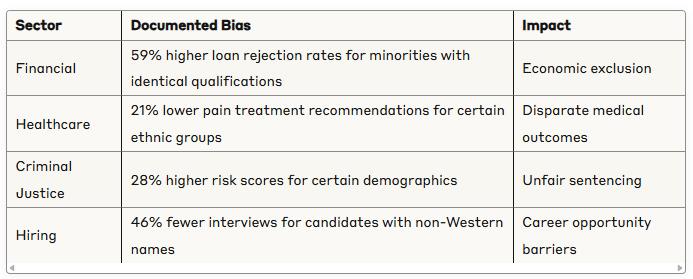

Real-World Consequences

Biased AI creates tangible harm.

These aren’t abstract concerns. In 2023, three major corporations paid settlements exceeding $25 million for AI bias-related damages.

Key Components of Effective Bias Monitoring Systems

Modern bias monitoring platforms contain several critical elements:

1. Comprehensive Metrics Dashboard

Effective dashboards track key fairness indicators:

- Statistical parity: Do all groups receive positive outcomes at similar rates?

- Equal opportunity: Are false negative rates consistent across groups?

- Counterfactual fairness: Would decisions change if only protected attributes changed?

- Intersectional analysis: How do multiple demographic factors interact?
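Statistical parity and equal opportunity both reduce to simple per-group rates. The sketch below computes them in plain Python, assuming binary (0/1) labels and predictions; the function names are illustrative and not taken from any particular monitoring product. Passing tuple group keys, such as `("F", "over_60")`, gives an intersectional breakdown.

```python
from collections import defaultdict


def group_rates(y_true, y_pred, groups):
    """Per-group selection rate and true positive rate (TPR).

    y_true and y_pred are sequences of 0/1 labels and predictions; groups
    holds one group key per record. Tuple keys such as ("F", "over_60")
    give an intersectional breakdown.
    """
    counts = defaultdict(lambda: {"n": 0, "selected": 0, "pos": 0, "tp": 0})
    for yt, yp, g in zip(y_true, y_pred, groups):
        c = counts[g]
        c["n"] += 1
        c["selected"] += yp
        c["pos"] += yt
        c["tp"] += yt * yp  # 1 only when label and prediction are both 1
    return {
        g: {
            "selection_rate": c["selected"] / c["n"],
            # TPR is undefined for a group with no positive labels.
            "tpr": c["tp"] / c["pos"] if c["pos"] else None,
        }
        for g, c in counts.items()
    }


def statistical_parity_difference(rates):
    """Largest gap in selection rate between any two groups."""
    sel = [r["selection_rate"] for r in rates.values()]
    return max(sel) - min(sel)


def equal_opportunity_difference(rates):
    """Largest TPR gap between groups (equal to the largest FNR gap)."""
    tprs = [r["tpr"] for r in rates.values() if r["tpr"] is not None]
    return max(tprs) - min(tprs)


y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
rates = group_rates(y_true, y_pred, groups)
print(statistical_parity_difference(rates))  # 0.5
print(equal_opportunity_difference(rates))   # 0.5
```

Because the false negative rate is one minus the TPR, the TPR gap and the FNR gap are the same number. Open-source fairness toolkits ship tested versions of these metrics; a hand-rolled version like this is mainly useful for understanding what a dashboard number actually measures.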

2. Continuous Testing Framework

Robust systems include:

- Pre-deployment testing: Evaluating models before release

- Production monitoring: Tracking live performance

- A/B comparison: Testing multiple models simultaneously

- Drift detection: Identifying when models diverge from baseline fairness
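Drift detection can be as simple as recomputing a fairness metric on each window of production predictions and comparing it with the value measured before release. A minimal sketch, assuming the selection-rate gap as the tracked metric and a hypothetical fixed `tolerance`; real deployments may prefer statistical tests so that small, noisy windows do not trigger alerts.

```python
def selection_rate_gap(preds, groups):
    """Largest difference in positive-prediction rate between groups."""
    totals, positives = {}, {}
    for p, g in zip(preds, groups):
        totals[g] = totals.get(g, 0) + 1
        positives[g] = positives.get(g, 0) + p
    if not totals:
        return 0.0  # an empty window carries no evidence of disparity
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


def detect_fairness_drift(windows, baseline_gap, tolerance=0.05):
    """Flag production windows whose parity gap exceeds the baseline.

    windows: iterable of (predictions, group_keys) pairs, e.g. one per day.
    baseline_gap: the gap measured during pre-deployment testing.
    tolerance: hypothetical slack allowed before an alert fires.
    Returns (window_index, observed_gap) for each flagged window.
    """
    alerts = []
    for i, (preds, groups) in enumerate(windows):
        gap = selection_rate_gap(preds, groups)
        if gap > baseline_gap + tolerance:
            alerts.append((i, round(gap, 3)))
    return alerts


windows = [
    ([1, 0, 1, 0], ["A", "A", "B", "B"]),  # both groups selected at 50%
    ([1, 1, 1, 0], ["A", "A", "B", "B"]),  # A at 100%, B at 50%
]
print(detect_fairness_drift(windows, baseline_gap=0.10))  # [(1, 0.5)]
```

This version only alerts when the gap widens; flagging improvements as well would simply compare the absolute difference from the baseline.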

3. Remediation Tools

When bias is detected, these tools help:

- Reweighting algorithms: Adjusting training-sample weights so underrepresented groups and outcomes carry appropriate influence

- Data augmentation: Expanding training data to address gaps

- Adversarial debiasing: Training a model alongside an adversary that tries to predict the protected attribute from the model's outputs, so the model learns not to encode it

- Human review triggers: Flagging high-risk decisions for manual evaluation
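One well-known reweighting scheme is the reweighing method of Kamiran and Calders, which gives each training example the weight P(group) × P(label) / P(group, label). It is shown here as one example of the technique, not as the method any specific tool uses. The sketch assumes one group key and one 0/1 label per example.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Per-sample weights w = P(group) * P(label) / P(group, label).

    After weighting, group membership and label are independent in the
    training data, so every group has the same weighted positive rate.
    Reweighting cannot create (group, label) combinations absent from the data.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] / n) * (label_counts[y] / n) / (joint_counts[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]


groups = ["A"] * 4 + ["B"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]  # A: 75% positive, B: 25% positive
weights = reweighing_weights(groups, labels)
print([round(w, 2) for w in weights])
# [0.67, 0.67, 0.67, 2.0, 2.0, 0.67, 0.67, 0.67]
```

With these weights, both groups have a weighted positive rate of 50%. Many scikit-learn estimators accept such weights through the `sample_weight` argument of `fit`.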

4. Documentation and Reporting

For accountability, systems should generate:

- Audit trails: Detailed records of model decisions

- Compliance reports: Documentation meeting regulatory requirements

- Stakeholder dashboards: Simplified views for non-technical teams

- Incident logs: Records of bias events and remediation actions
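Audit trails and incident logs are easiest to query and review when each event is a structured record. A minimal sketch, assuming a JSON Lines file as storage; the `BiasIncident` fields and the `append_incident` helper are hypothetical, not a schema required by any regulation or tool.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class BiasIncident:
    """One entry in a hypothetical append-only bias incident log."""

    model_id: str
    metric: str
    value: float
    threshold: float
    affected_groups: list
    remediation: str = "pending review"
    detected_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)


def append_incident(path, incident):
    """Append one incident as a JSON line; existing lines are never rewritten."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(incident.to_json() + "\n")


incident = BiasIncident(
    model_id="credit-model-v3",
    metric="statistical_parity_difference",
    value=0.17,
    threshold=0.10,
    affected_groups=["group_a", "group_b"],
)
print(incident.to_json())
```

Appending rather than editing records keeps the history intact: a remediation update becomes a new line that references the same `model_id` and metric.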

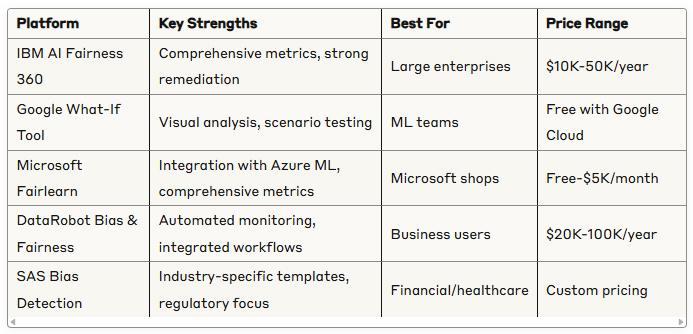

Leading Bias Monitoring Solutions

Enterprise Platforms

Open-Source Options

For organizations with technical resources:

- Aequitas
  - Developed by the University of Chicago
  - Strong visualization components
  - Focus on public policy applications

- FairML
  - Feature importance analysis
  - Identifies which inputs drive bias
  - Python-based with a simple API

- Themis-ML
  - Multiple discrimination criteria
  - Includes correction algorithms
  - Well documented for data scientists

- AI Explainability 360
  - Companion to IBM’s AI Fairness 360
  - Links bias to specific model features
  - Comprehensive documentation

Implementation: A 5-Phase Approach

Phase 1: Assessment (2-3 Weeks)

- Inventory all AI systems and their potential impact

- Prioritize high-risk models for monitoring

- Define fairness criteria specific to each application

- Identify relevant protected attributes and fairness metrics

Phase 2: Tool Selection (2-4 Weeks)

- Evaluate monitoring solutions against requirements

- Consider integration with existing ML infrastructure

- Assess technical capabilities of implementation team

- Run small-scale pilot with historical data

Phase 3: Technical Integration (4-8 Weeks)

- Connect monitoring systems to model pipelines

- Configure alerts and thresholds

- Establish baseline performance metrics

- Create testing datasets representing diverse populations
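Alert thresholds are easier to review and audit when they live in configuration rather than being scattered through code. A minimal sketch with hypothetical model names and limits; the 0.8 minimum ratio echoes the "four-fifths" rule of thumb from US employment-selection guidance, but appropriate values depend on the application and on legal advice.

```python
# Hypothetical per-model limits; the numbers are illustrative only.
THRESHOLDS = {
    "credit-scoring": {"min_disparate_impact": 0.8, "max_tpr_gap": 0.05},
}


def disparate_impact_ratio(selection_rates):
    """Lowest group selection rate divided by the highest."""
    rates = list(selection_rates.values())
    if max(rates) == 0:
        return 1.0  # nobody selected in any group: no relative disparity
    return min(rates) / max(rates)


def check_thresholds(model_id, selection_rates, tprs, thresholds=THRESHOLDS):
    """Return a human-readable alert for every limit the model breaches."""
    limits = thresholds[model_id]
    alerts = []
    di = disparate_impact_ratio(selection_rates)
    if di < limits["min_disparate_impact"]:
        alerts.append(
            f"disparate impact {di:.2f} below {limits['min_disparate_impact']}"
        )
    tpr_gap = max(tprs.values()) - min(tprs.values())
    if tpr_gap > limits["max_tpr_gap"]:
        alerts.append(f"TPR gap {tpr_gap:.2f} above {limits['max_tpr_gap']}")
    return alerts


print(check_thresholds("credit-scoring", {"A": 0.50, "B": 0.35}, {"A": 0.80, "B": 0.78}))
# ['disparate impact 0.70 below 0.8']
```

Running this check against the baseline metrics from testing datasets gives a concrete starting point before alerts are wired to production traffic.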

Phase 4: Team Training (2-3 Weeks)

- Train data scientists on bias mitigation techniques

- Educate business stakeholders on interpreting metrics

- Develop clear escalation procedures for bias incidents

- Create cross-functional review processes

Phase 5: Operational Excellence (Ongoing)

- Conduct regular bias audits

- Update monitoring as models change

- Track remediation effectiveness

- Stay current with evolving fairness standards

Case Studies: Bias Monitoring in Action

Financial Services: Major US Bank

- Challenge: Credit models showed 17% approval rate disparity between demographic groups

- Solution: Implemented continuous monitoring with counterfactual testing

- Approach: Modified feature selection and weighting while maintaining accuracy

- Results: Reduced disparity to 3% while improving overall prediction accuracy by 2%
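Counterfactual testing of the kind this case study mentions is often approximated with an attribute-flip test: copy each record, swap only the protected attribute, and count how often the decision changes. This is a simplification, not the bank's actual method. Full counterfactual fairness requires a causal model, because changing a protected attribute can also change correlated features (such as a postal code), which this sketch leaves untouched. The `toy_model` below is a deliberately biased hypothetical rule.

```python
def counterfactual_flip_rate(model, records, attribute, values):
    """Share of records whose decision changes when only `attribute` is swapped.

    model: any callable mapping a feature dict to a 0/1 decision.
    values: the pair of attribute values to swap between.
    Records holding neither value are skipped.
    """
    a, b = values
    tested = flipped = 0
    for record in records:
        if record.get(attribute) not in (a, b):
            continue
        twin = dict(record)  # copy so the original record is untouched
        twin[attribute] = b if record[attribute] == a else a
        tested += 1
        if model(record) != model(twin):
            flipped += 1
    return flipped / tested if tested else 0.0


def toy_model(x):
    # Deliberately biased toy rule: group "A" gets a lower income bar.
    return int(x["income"] > 50 or (x["group"] == "A" and x["income"] > 40))


records = [
    {"income": 45, "group": "A"},
    {"income": 45, "group": "B"},
    {"income": 60, "group": "A"},
    {"income": 30, "group": "B"},
]
print(counterfactual_flip_rate(toy_model, records, "group", ("A", "B")))  # 0.5
```

A flip rate of zero shows the model does not use the attribute directly; it does not rule out bias that flows through proxy features.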

Healthcare: Regional Hospital System

- Challenge: Clinical decision support systems showed bias in treatment recommendations

- Solution: Deployed intersectional analysis tools to identify complex bias patterns

- Approach: Augmented training data and implemented fairness constraints

- Results: Achieved consistent care recommendations across all patient demographics

Hiring: Global Technology Company

- Challenge: Resume screening algorithm favored candidates from certain universities

- Solution: Implemented fairness monitoring with regular model retraining

- Approach: Created blind evaluation pathways and balanced training datasets

- Results: Increased workforce diversity by 34% while maintaining quality of hires

Common Challenges and Solutions

Technical Complexities

Challenge: Fairness metrics often conflict with each other and with accuracy goals.

Solution: Define a hierarchy of fairness priorities specific to each use case, and document every tradeoff decision.
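A small numeric illustration of why these metrics conflict, using made-up base rates: when groups differ in how often the true label is positive, even a perfectly accurate classifier satisfies equal opportunity while violating statistical parity.

```python
def selection_rate_and_tpr(y_true, y_pred):
    """Return (share predicted positive, true positive rate) for one group."""
    selection = sum(y_pred) / len(y_pred)
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    return selection, tp / sum(y_true)


# Illustrative base rates: 60% of group A and 30% of group B are qualified.
labels_a = [1] * 6 + [0] * 4
labels_b = [1] * 3 + [0] * 7

# A perfectly accurate classifier predicts exactly the true labels.
sel_a, tpr_a = selection_rate_and_tpr(labels_a, labels_a)
sel_b, tpr_b = selection_rate_and_tpr(labels_b, labels_b)

print(f"TPR gap: {tpr_a - tpr_b:.2f}")             # TPR gap: 0.00
print(f"Selection-rate gap: {sel_a - sel_b:.2f}")  # Selection-rate gap: 0.30
```

Closing the 0.30 selection-rate gap would require approving unqualified members of group B or rejecting qualified members of group A. Either choice lowers accuracy, and the second also breaks equal opportunity, which is why the priority order has to be decided per use case.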

Data Limitations

Challenge: Obtaining representative data for bias testing, especially for underrepresented groups.

Solution: Use synthetic data generation, targeted data collection, and transfer learning techniques.

Organizational Resistance

Challenge: Teams fear reduced model performance or implementation delays.

Solution: Start with education on reputational and regulatory risks. Demonstrate how fairness improves business outcomes.

Evolving Standards

Challenge: Bias definitions and measurements continue to evolve.

Solution: Build flexible monitoring frameworks that can incorporate new metrics as they develop.
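One way to keep a monitoring framework open to new metrics is a registry: each metric is a function registered under a name, and the evaluation loop runs whatever is registered. A minimal sketch of that design pattern; the registry, decorator, and metric names are hypothetical and not part of any specific tool.

```python
from typing import Callable, Dict, Sequence

# Maps a metric name to a function of (y_true, y_pred, groups) -> float.
METRICS: Dict[str, Callable[..., float]] = {}


def register_metric(name: str):
    """Decorator that adds a metric to the registry under `name`."""
    def decorator(fn):
        METRICS[name] = fn
        return fn
    return decorator


def _selection_rates(y_pred: Sequence[int], groups: Sequence) -> Dict:
    totals: Dict = {}
    positives: Dict = {}
    for p, g in zip(y_pred, groups):
        totals[g] = totals.get(g, 0) + 1
        positives[g] = positives.get(g, 0) + p
    return {g: positives[g] / totals[g] for g in totals}


@register_metric("statistical_parity_difference")
def statistical_parity_difference(y_true, y_pred, groups):
    rates = _selection_rates(y_pred, groups).values()
    return max(rates) - min(rates)


# A metric added later plugs in without changing the evaluation code.
@register_metric("disparate_impact_ratio")
def disparate_impact_ratio(y_true, y_pred, groups):
    rates = _selection_rates(y_pred, groups).values()
    return min(rates) / max(rates) if max(rates) else 1.0


def evaluate(y_true, y_pred, groups, names=None):
    """Run the named metrics, or every registered metric if names is None."""
    names = list(METRICS) if names is None else names
    return {name: METRICS[name](y_true, y_pred, groups) for name in names}


print(evaluate([1, 0, 1, 1], [1, 0, 1, 1], ["A", "A", "B", "B"]))
# {'statistical_parity_difference': 0.5, 'disparate_impact_ratio': 0.5}
```

Because every metric shares one signature, dashboards, alerts, and reports can consume new metrics as soon as they are registered.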

Future Developments in Bias Monitoring

The field is advancing rapidly with several emerging trends:

- Automated Debiasing: Systems that not only detect but automatically mitigate bias

- Federated Evaluation: Privacy-preserving techniques for testing bias across organizations

- Causal Analysis: Moving beyond correlation to understand root causes of algorithmic bias

- Regulatory Standards: Industry-specific requirements for bias monitoring and reporting

- Consumer Fairness Scores: Public metrics on AI fairness becoming competitive differentiators

Getting Started: Your First 30 Days

If you’re beginning your bias monitoring journey, focus on these initial steps:

- Identify your highest-risk AI systems
  - Customer-facing applications
  - Systems making significant decisions about individuals
  - Applications using historically biased data

- Establish simple baseline metrics
  - Start with statistical parity across key demographic groups
  - Document current performance as your benchmark
  - Set reasonable improvement targets

- Build cross-functional ownership
  - Engage legal, ethics, and business teams
  - Define clear responsibilities for monitoring and remediation
  - Create executive dashboards for visibility

- Select a monitoring tool matched to your resources
  - Limited technical resources? Choose managed solutions
  - Strong ML team? Consider open-source frameworks
  - Regulated industry? Prioritize documentation features

- Create a bias response playbook
  - Define thresholds that trigger reviews
  - Establish clear remediation procedures
  - Document all bias-related decisions

The ROI of Bias Monitoring

Organizations implementing robust bias monitoring report:

- 42% reduction in compliance-related costs

- 27% improvement in customer trust metrics

- 38% decrease in model-related complaints

- 31% increase in model adoption by stakeholders

Beyond these metrics, the most significant benefit may be preventing major bias incidents that cause lasting reputational damage.

Final Thoughts

AI bias isn’t just an ethical issue. It’s also a business risk, a regulatory concern, and a barrier to effective AI implementation. Monitoring systems provide the visibility and tools needed to build fairer, more effective AI.

The question isn’t whether your organization can afford bias monitoring, but whether it can afford to operate without it.

What’s your next step toward fairer AI?
